ffmpeg learning (12): audio/video transcoding (1), using sws and swr

Posted 2 years ago, category: FFmpeg, 1586 views, by touch

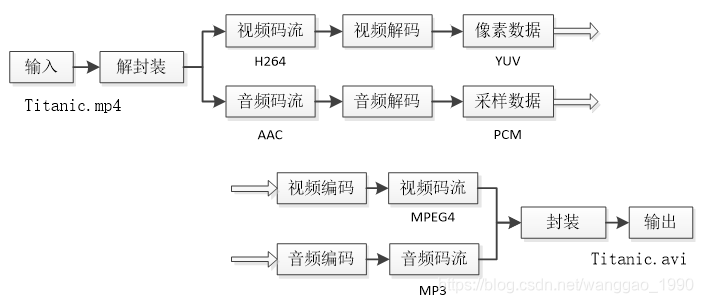

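The earlier posts "ffmpeg learning (10): audio/video file muxer (1), container format conversion" and "ffmpeg learning (11): audio/video file muxer (2), multi-input muxing" covered converting a media file's container format and muxing audio and video from several inputs. This article covers transcoding with ffmpeg: converting a video from one format (codec and container) to another. The process decodes first and then re-encodes; the transcoding flow is explained using the figure as an example.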

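In the example, the input container is MP4 with H.264 video and AAC audio; the output container is AVI with MPEG-4 video and MP3 audio. First the compressed video and audio streams are separated (demuxed) from the input. Each stream is then decoded to obtain uncompressed pixel data or audio samples, which are re-encoded into new video and audio streams. Finally the two streams are multiplexed back into a single file.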

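During transcoding, the decoded uncompressed pixel data or audio samples may need an image conversion or resampling before they can be fed to the encoder. There are two ways to do this, each covered in its own article: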

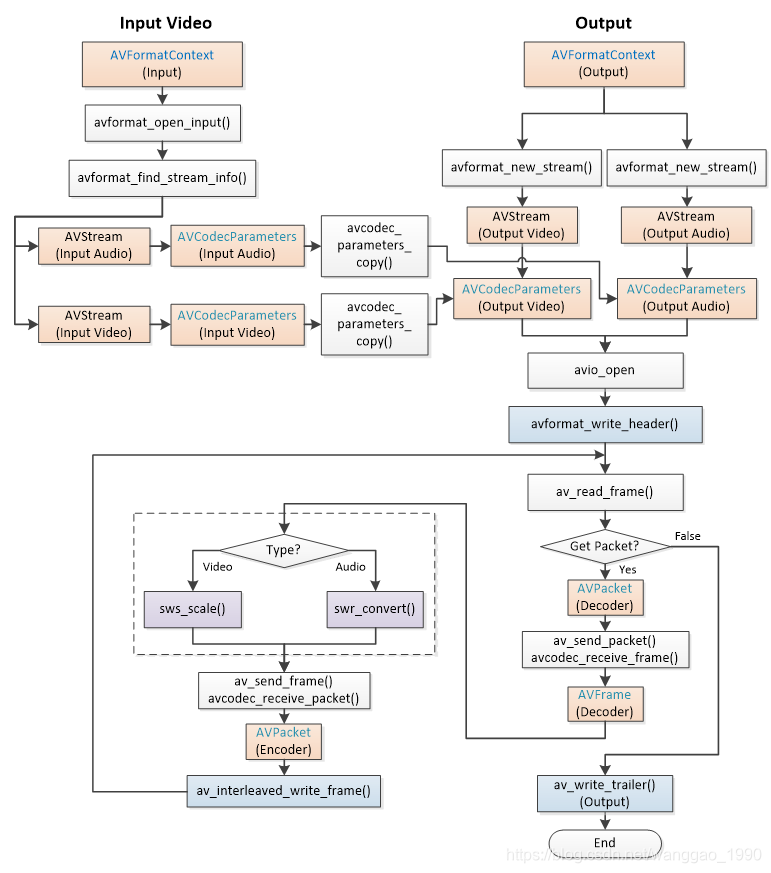

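1. Using sws_scale() and swr_convert()

The decoded uncompressed data is converted first and then encoded. Each conversion does a single job and the overall flow is simple, but the conversion code itself is fairly involved.

2. Using AVFilterGraph

An AVFilterGraph is built according to the input and output requirements and can implement complex processing. Every decoded frame is passed through the filter graph and the result is encoded. Creating and initializing the AVFilterGraph is complex, but using it is simple.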
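Whether a conversion step is needed at all depends on whether the encoder accepts the format the decoder produces. The sketch below is a self-contained illustration of that decision, not FFmpeg code: `PixFmt`, `FormatChoice` and `choose_pix_fmt()` are hypothetical names, and the list terminated by `PIX_NONE` only mimics how FFmpeg ends `AVCodec::pix_fmts` with `AV_PIX_FMT_NONE`.

```cpp
#include <cassert>

// Hypothetical stand-in for AVPixelFormat. PIX_NONE ends a list, the way
// AV_PIX_FMT_NONE ends the AVCodec::pix_fmts array.
enum PixFmt { PIX_NONE = -1, PIX_YUV420P, PIX_NV12, PIX_YUV444P };

struct FormatChoice {
    PixFmt fmt;          // format to configure on the encoder
    bool needs_convert;  // true if frames must go through a sws_scale()-style step
};

// `supported` == nullptr models an encoder that publishes no format list.
FormatChoice choose_pix_fmt(PixFmt decoded, const PixFmt *supported) {
    if (supported == nullptr)
        return {decoded, false};
    for (const PixFmt *p = supported; *p != PIX_NONE; ++p)
        if (*p == decoded)
            return {decoded, false};  // encoder accepts the decoded frames as they are
    return {supported[0], true};      // otherwise use the first listed format and convert
}
```

The programs in this article take a simpler route: the first one always uses `encoder->pix_fmts[0]`, and the second one fixes `AV_PIX_FMT_YUV420P` and always converts with `sws_scale()`.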

This article covers the first approach. The flow is as follows:

Decode-then-re-encode flow

/*
 * Transcoding: uncompressed data is converted with sws_scale() / swr_convert()
 */
#include <stdio.h>

#ifdef __cplusplus
extern "C" {
#endif
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
#include "libswresample/swresample.h"
#include "libavutil/opt.h"
#ifdef __cplusplus
}
#endif

// Input and output format contexts
static AVFormatContext *ifmt_ctx;
static AVFormatContext *ofmt_ctx;

// Decoder/encoder context pair for one stream, to simplify passing parameters
typedef struct StreamContext {
    AVCodecContext *dec_ctx;
    AVCodecContext *enc_ctx;
} StreamContext;

static StreamContext *stream_ctx; // array with one element per input stream

static int open_input_file(const char *filename)
{
    int ret;
    ifmt_ctx = NULL;
    if ((ret = avformat_open_input(&ifmt_ctx, filename, NULL, NULL)) < 0) {
        av_log(NULL, AV_LOG_ERROR, "Cannot open input file %s\n", filename);
        return ret;
    }
    if ((ret = avformat_find_stream_info(ifmt_ctx, NULL)) < 0) {
        av_log(NULL, AV_LOG_ERROR, "Cannot find stream information\n");
        return ret;
    }
    stream_ctx = (StreamContext *)av_mallocz_array(ifmt_ctx->nb_streams, sizeof(StreamContext));
    if (!stream_ctx)
        return AVERROR(ENOMEM);

    for (unsigned int i = 0; i < ifmt_ctx->nb_streams; i++) {
        AVStream *stream = ifmt_ctx->streams[i];
        AVMediaType type = stream->codecpar->codec_type;
        /* Reencode video & audio; other streams keep dec_ctx == NULL and are remuxed */
        if (type != AVMEDIA_TYPE_VIDEO && type != AVMEDIA_TYPE_AUDIO)
            continue;
        const AVCodec *dec = avcodec_find_decoder(stream->codecpar->codec_id);
        if (!dec) {
            av_log(NULL, AV_LOG_ERROR, "Failed to find decoder for stream #%u\n", i);
            return AVERROR_DECODER_NOT_FOUND;
        }
        AVCodecContext *codec_ctx = avcodec_alloc_context3(dec);
        if (!codec_ctx) {
            av_log(NULL, AV_LOG_ERROR, "Failed to allocate the decoder context for stream #%u\n", i);
            return AVERROR(ENOMEM);
        }
        stream_ctx[i].dec_ctx = codec_ctx; // stored right away so main() frees it on any error
        ret = avcodec_parameters_to_context(codec_ctx, stream->codecpar);
        if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Failed to copy decoder parameters to input decoder context "
                   "for stream #%u\n", i);
            return ret;
        }
        if (type == AVMEDIA_TYPE_VIDEO)
            codec_ctx->framerate = av_guess_frame_rate(ifmt_ctx, stream, NULL);
        // packet timestamps are in the stream time base; telling the decoder also removes
        // the warning "Could not update timestamps for skipped samples"
        codec_ctx->pkt_timebase = stream->time_base;
        /* Open decoder */
        if ((ret = avcodec_open2(codec_ctx, dec, NULL)) < 0) {
            av_log(NULL, AV_LOG_ERROR, "Failed to open decoder for stream #%u\n", i);
            return ret;
        }
    }
    av_dump_format(ifmt_ctx, 0, filename, 0);
    return 0;
}

static int open_output_file(const char *filename)
{
    int ret;
    ofmt_ctx = NULL;
    avformat_alloc_output_context2(&ofmt_ctx, NULL, NULL, filename);
    if (!ofmt_ctx) {
        av_log(NULL, AV_LOG_ERROR, "Could not create output context\n");
        return AVERROR_UNKNOWN;
    }
    for (unsigned int i = 0; i < ifmt_ctx->nb_streams; i++) {
        AVStream *in_stream = ifmt_ctx->streams[i];
        AVStream *out_stream = avformat_new_stream(ofmt_ctx, NULL);
        if (!out_stream) {
            av_log(NULL, AV_LOG_ERROR, "Failed allocating output stream\n");
            return AVERROR_UNKNOWN;
        }
        AVCodecContext *dec_ctx = stream_ctx[i].dec_ctx;
        if (!dec_ctx) {
            /* not audio/video: copy the parameters so the stream can be remuxed */
            if ((ret = avcodec_parameters_copy(out_stream->codecpar, in_stream->codecpar)) < 0) {
                av_log(NULL, AV_LOG_ERROR, "Copying parameters for stream #%u failed\n", i);
                return ret;
            }
            out_stream->time_base = in_stream->time_base;
            continue;
        }
        /* re-encode with the same codec as the input */
        const AVCodec *encoder = avcodec_find_encoder(dec_ctx->codec_id);
        if (!encoder) {
            av_log(NULL, AV_LOG_FATAL, "Necessary encoder not found\n");
            return AVERROR_INVALIDDATA;
        }
        AVCodecContext *enc_ctx = avcodec_alloc_context3(encoder);
        if (!enc_ctx) {
            av_log(NULL, AV_LOG_FATAL, "Failed to allocate the encoder context\n");
            return AVERROR(ENOMEM);
        }
        stream_ctx[i].enc_ctx = enc_ctx;
        /* encoder parameters: same as the input */
        if (dec_ctx->codec_type == AVMEDIA_TYPE_VIDEO) {
            enc_ctx->height = dec_ctx->height;
            enc_ctx->width = dec_ctx->width;
            enc_ctx->sample_aspect_ratio = dec_ctx->sample_aspect_ratio;
            /* take first format from list of supported formats */
            enc_ctx->pix_fmt = encoder->pix_fmts ? encoder->pix_fmts[0] : dec_ctx->pix_fmt;
            /* video time_base can be set to whatever is handy and supported by encoder */
            enc_ctx->time_base = av_inv_q(dec_ctx->framerate);
        } else { // AVMEDIA_TYPE_AUDIO
            enc_ctx->sample_rate = dec_ctx->sample_rate;
            // the decoder may leave channel_layout unset; derive it from the channel count
            enc_ctx->channel_layout = dec_ctx->channel_layout ? dec_ctx->channel_layout
                                      : av_get_default_channel_layout(dec_ctx->channels);
            enc_ctx->channels = av_get_channel_layout_nb_channels(enc_ctx->channel_layout);
            /* take first format from list of supported formats */
            enc_ctx->sample_fmt = encoder->sample_fmts ? encoder->sample_fmts[0] : dec_ctx->sample_fmt;
            enc_ctx->time_base = AVRational{1, enc_ctx->sample_rate};
        }
        /* must be set before avcodec_open2() so the encoder produces global extradata */
        if (ofmt_ctx->oformat->flags & AVFMT_GLOBALHEADER)
            enc_ctx->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
        /* Third parameter can be used to pass settings to encoder */
        if ((ret = avcodec_open2(enc_ctx, encoder, NULL)) < 0) {
            av_log(NULL, AV_LOG_ERROR, "Cannot open encoder for stream #%u\n", i);
            return ret;
        }
        if ((ret = avcodec_parameters_from_context(out_stream->codecpar, enc_ctx)) < 0) {
            av_log(NULL, AV_LOG_ERROR, "Failed to copy encoder parameters to output stream #%u\n", i);
            return ret;
        }
        out_stream->time_base = enc_ctx->time_base;
        //out_stream->codecpar->codec_tag = 0;
    }
    av_dump_format(ofmt_ctx, 0, filename, 1);
    if (!(ofmt_ctx->oformat->flags & AVFMT_NOFILE)) {
        if ((ret = avio_open(&ofmt_ctx->pb, filename, AVIO_FLAG_WRITE)) < 0) {
            av_log(NULL, AV_LOG_ERROR, "Could not open output file '%s'\n", filename);
            return ret;
        }
    }
    /* init muxer, write output file header */
    if ((ret = avformat_write_header(ofmt_ctx, NULL)) < 0) {
        av_log(NULL, AV_LOG_ERROR, "Error occurred when opening output file\n");
        return ret;
    }
    return 0;
}

static int encode_write_frame(AVFrame *frame, unsigned int stream_index)
{
    AVCodecContext *enc_ctx = stream_ctx[stream_index].enc_ctx;
    AVPacket *enc_pkt = av_packet_alloc();
    if (!enc_pkt)
        return AVERROR(ENOMEM);
    // decoded pts are in the input stream time base; the encoder expects enc_ctx->time_base
    if (frame && frame->pts != AV_NOPTS_VALUE)
        frame->pts = av_rescale_q(frame->pts, ifmt_ctx->streams[stream_index]->time_base,
                                  enc_ctx->time_base);
    int ret = avcodec_send_frame(enc_ctx, frame); // frame == NULL starts flushing the encoder
    while (ret >= 0) {
        ret = avcodec_receive_packet(enc_ctx, enc_pkt);
        if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF) {
            ret = 0; // the encoder needs more input, or it is fully flushed
            break;
        } else if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Error during encoding\n");
            break;
        }
        // prepare packet for muxing: encoder time base -> output stream time base
        enc_pkt->stream_index = stream_index;
        av_packet_rescale_ts(enc_pkt, enc_ctx->time_base,
                             ofmt_ctx->streams[stream_index]->time_base);
        // mux encoded frame
        ret = av_interleaved_write_frame(ofmt_ctx, enc_pkt);
        av_packet_unref(enc_pkt);
    }
    av_packet_free(&enc_pkt);
    return ret;
}

int main()
{
    const char *input_file = "../files/Titanic.mp4";
    const char *output_file = "Titanic.flv";
    // declared before the first goto: in C++ a goto may not jump over initializations
    AVPacket *pkt = NULL;
    AVFrame *frame = NULL;
    int ret;

    if ((ret = open_input_file(input_file)) < 0)
        goto end;
    if ((ret = open_output_file(output_file)) < 0)
        goto end;
    pkt = av_packet_alloc();
    frame = av_frame_alloc();
    if (!pkt || !frame) {
        ret = AVERROR(ENOMEM);
        goto end;
    }

    while (1) {
        if ((ret = av_read_frame(ifmt_ctx, pkt)) < 0)
            break; // end of file (or read error)
        unsigned int stream_index = pkt->stream_index;
        if (!stream_ctx[stream_index].dec_ctx) {
            // not audio/video: remux the packet unchanged
            av_packet_rescale_ts(pkt, ifmt_ctx->streams[stream_index]->time_base,
                                 ofmt_ctx->streams[stream_index]->time_base);
            ret = av_interleaved_write_frame(ofmt_ctx, pkt);
            av_packet_unref(pkt);
            if (ret < 0)
                goto end;
            continue;
        }
        // decode
        ret = avcodec_send_packet(stream_ctx[stream_index].dec_ctx, pkt);
        av_packet_unref(pkt);
        if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Error while sending a packet to the decoder\n");
            goto end;
        }
        while (ret >= 0) {
            ret = avcodec_receive_frame(stream_ctx[stream_index].dec_ctx, frame);
            if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)
                break;
            else if (ret < 0) {
                av_log(NULL, AV_LOG_ERROR, "Error while receiving a frame from the decoder\n");
                goto end;
            }
            // encode and write
            ret = encode_write_frame(frame, stream_index);
            av_frame_unref(frame);
            if (ret < 0)
                goto end;
        }
    }

    // flush: drain each decoder, then each encoder
    for (unsigned int i = 0; i < ifmt_ctx->nb_streams; i++) {
        if (!stream_ctx[i].dec_ctx)
            continue; // remuxed stream, nothing to flush
        // flush decoder
        ret = avcodec_send_packet(stream_ctx[i].dec_ctx, NULL);
        while (ret >= 0) {
            ret = avcodec_receive_frame(stream_ctx[i].dec_ctx, frame);
            if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)
                break;
            else if (ret < 0) {
                av_log(NULL, AV_LOG_ERROR, "Error while receiving a frame from the decoder\n");
                goto end;
            }
            // encode and write
            ret = encode_write_frame(frame, i);
            av_frame_unref(frame);
            if (ret < 0)
                goto end;
        }
        // flush encoder
        if ((ret = encode_write_frame(NULL, i)) < 0)
            goto end;
    }
    ret = av_write_trailer(ofmt_ctx);

end:
    av_packet_free(&pkt);
    av_frame_free(&frame);
    for (unsigned int i = 0; ifmt_ctx && stream_ctx && i < ifmt_ctx->nb_streams; i++) {
        avcodec_free_context(&stream_ctx[i].dec_ctx);
        avcodec_free_context(&stream_ctx[i].enc_ctx);
    }
    av_freep(&stream_ctx);
    avformat_close_input(&ifmt_ctx);
    if (ofmt_ctx && !(ofmt_ctx->oformat->flags & AVFMT_NOFILE))
        avio_closep(&ofmt_ctx->pb);
    avformat_free_context(ofmt_ctx);
    return ret < 0 ? 1 : 0;
}

Note the following points:

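1. sws_scale() and swr_convert() only fill in the converted pixel or sample data; they do not set the output AVFrame's pts, so it has to be taken from the input frame's pts.

2. Because the resampler delays samples, the number of input samples waiting to be converted can grow; when it does, the output frame is reallocated so it can hold the required number of samples.

3. After audio samples are converted with swr_convert(), the number of samples passed on as output must be its return value, i.e. set frame_tmp->nb_samples = ret;. After the frame has been written, restore nb_samples to the number of samples actually allocated, so the buffer is not repeatedly freed and reallocated.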

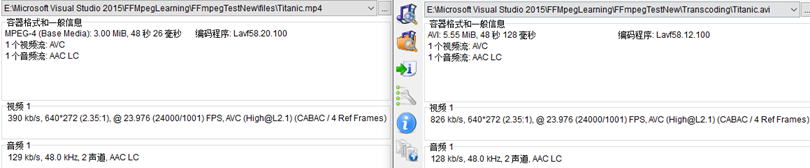

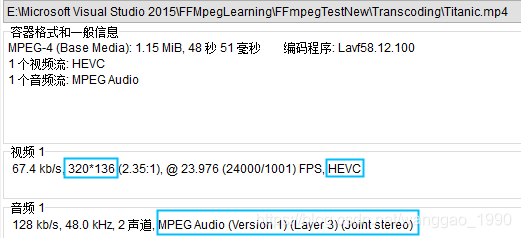

Screenshots of the input and output:

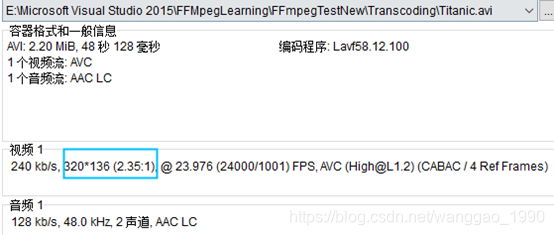

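4. Because every frame goes through sws_scale(), the video encoder can simply be configured with an output resolution of half the input, for example:

/* Output differs from the input: half resolution */ enc_ctx->height = dec_ctx->height / 2; enc_ctx->width = dec_ctx->width / 2;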

5. The message "Could not update timestamps for skipped samples"

This warning does not affect the output; setting codec_ctx->pkt_timebase = stream->time_base; on the decoder context resolves it.
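Notes 1 and 5 are both about timestamps: a converted frame only has the right pts if it is carried over from the input and, when input and output time bases differ, converted between them. FFmpeg does that conversion with av_rescale_q(), which rounds to the nearest value with halves away from zero. The self-contained sketch below reimplements just that arithmetic for illustration; `Rational` and `rescale_ts()` are hypothetical names, and unlike av_rescale_q() this version does not guard against 64-bit overflow.

```cpp
#include <cassert>
#include <cstdint>

struct Rational { int64_t num, den; };  // hypothetical stand-in for AVRational

// Convert a timestamp from time base `from` to time base `to`, rounding to
// nearest with halves away from zero (the rounding av_rescale_q() uses).
// Not overflow-safe: ts * num must fit in int64_t.
int64_t rescale_ts(int64_t ts, Rational from, Rational to) {
    const int64_t num = from.num * to.den;  // ts * from / to == ts * num / den
    const int64_t den = from.den * to.num;
    if (ts < 0)
        return -(((-ts) * num + den / 2) / den);
    return (ts * num + den / 2) / den;
}
```

For example, pts 512 in a 1/12800 time base becomes pts 1 in a 1/25 time base, and 1024 samples' worth of time at 1/44100 is about 23 ms in a 1/1000 time base.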

Decode-then-re-encode flow (with parameter changes)

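Changing the audio sample rate keeps the output audio as long as the input. For video, however, when the number of input frames is fixed, changing only the frame rate does not by itself produce the right number of output frames (frames would have to be dropped or duplicated), which can cause A/V synchronization problems. This example therefore uses only sws_scale() and swr_convert() and does not change the video frame rate or the audio sample rate.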
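The frame-rate problem is plain arithmetic: a fixed number of frames played at a different rate lasts a different time, whereas resampling audio changes the sample count in proportion and keeps the duration. The hypothetical helpers below only make the numbers explicit; they are not part of the transcoding program.

```cpp
#include <cassert>
#include <cstdint>

// Duration in seconds of `frames` frames played at fps_num/fps_den.
double duration_s(int64_t frames, int fps_num, int fps_den) {
    return static_cast<double>(frames) * fps_den / fps_num;
}

// Frames needed to cover `seconds` at fps_num/fps_den, rounded to nearest.
int64_t frames_for(double seconds, int fps_num, int fps_den) {
    return static_cast<int64_t>(seconds * fps_num / fps_den + 0.5);
}

// Sample count after resampling from in_rate to out_rate (same duration).
int64_t resampled_count(int64_t samples, int in_rate, int out_rate) {
    return samples * out_rate / in_rate;
}
```

Relabelling 240 frames from 24 fps to 15 fps stretches 10 s of video to 16 s; keeping 10 s at 15 fps would mean dropping 90 of the 240 frames. By contrast, 10 s of 44.1 kHz audio (441000 samples) simply becomes 480000 samples at 48 kHz.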

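In this transcode the video codec is changed to H.265 and the output resolution is halved; the audio codec is changed to MP3 (the frame size changes from AAC's 1024 samples to 1152).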
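With 1024-sample AAC frames in and 1152-sample MP3 frames out, decoded frames and encoder frames no longer line up. The program below appears to rely on the MP3 encoder accepting frames shorter than its frame_size; an encoder that requires exactly frame_size samples per frame would need the samples regrouped first, which is what FFmpeg's AVAudioFifo is for. The sketch shows only the regrouping logic, on one channel of int16_t samples held in a std::deque; `SampleFifo` and `count_full_frames()` are hypothetical names, not FFmpeg API.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

// Minimal single-channel sample FIFO: push decoder-sized chunks,
// pop encoder-sized frames.
class SampleFifo {
public:
    void push(const std::vector<int16_t> &chunk) {
        buf_.insert(buf_.end(), chunk.begin(), chunk.end());
    }
    std::size_t size() const { return buf_.size(); }
    // Pops exactly n samples; returns false (and pops nothing) if fewer are queued.
    bool pop(std::size_t n, std::vector<int16_t> &out) {
        if (buf_.size() < n)
            return false;
        auto last = buf_.begin() + static_cast<std::ptrdiff_t>(n);
        out.assign(buf_.begin(), last);
        buf_.erase(buf_.begin(), last);
        return true;
    }
private:
    std::deque<int16_t> buf_;
};

// Feeds `chunks` chunks of `in_size` samples and counts full `out_size` frames;
// `*leftover` receives the samples that would form a final short frame at EOF.
std::size_t count_full_frames(std::size_t chunks, std::size_t in_size,
                              std::size_t out_size, std::size_t *leftover) {
    SampleFifo fifo;
    std::vector<int16_t> frame;
    std::size_t produced = 0;
    for (std::size_t i = 0; i < chunks; ++i) {
        fifo.push(std::vector<int16_t>(in_size, 0));
        while (fifo.pop(out_size, frame))
            ++produced;  // in a real transcoder `frame` would go to the encoder here
    }
    if (leftover)
        *leftover = fifo.size();
    return produced;
}
```

Nine 1024-sample frames (9216 samples) regroup into exactly eight 1152-sample frames; with a tenth input frame, 1024 samples remain for a final short frame.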

/*
 * Transcoding: uncompressed data is converted with sws_scale() / swr_convert()
 */
#include <stdio.h>

#ifdef __cplusplus
extern "C" {
#endif
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
#include "libswresample/swresample.h"
#include "libavutil/opt.h"
#ifdef __cplusplus
}
#endif

// Input and output format contexts
static AVFormatContext *ifmt_ctx;
static AVFormatContext *ofmt_ctx;

// Per-stream codec contexts plus conversion state, to simplify passing parameters
typedef struct StreamContext {
    AVCodecContext *dec_ctx;
    AVCodecContext *enc_ctx;
    SwsContext *sws_ctx;   // video: pixel format / size conversion
    AVFrame *frame_v;      // video: reusable converted frame
    SwrContext *swr_ctx;   // audio: sample format / channel layout conversion
    AVFrame *frame_a;      // audio: reusable converted frame
} StreamContext;

static StreamContext *stream_ctx; // array with one element per input stream

static int open_input_file(const char *filename)
{
    int ret;
    ifmt_ctx = NULL;
    if ((ret = avformat_open_input(&ifmt_ctx, filename, NULL, NULL)) < 0) {
        av_log(NULL, AV_LOG_ERROR, "Cannot open input file %s\n", filename);
        return ret;
    }
    if ((ret = avformat_find_stream_info(ifmt_ctx, NULL)) < 0) {
        av_log(NULL, AV_LOG_ERROR, "Cannot find stream information\n");
        return ret;
    }
    stream_ctx = (StreamContext *)av_mallocz_array(ifmt_ctx->nb_streams, sizeof(StreamContext));
    if (!stream_ctx)
        return AVERROR(ENOMEM);

    for (unsigned int i = 0; i < ifmt_ctx->nb_streams; i++) {
        AVStream *stream = ifmt_ctx->streams[i];
        AVMediaType type = stream->codecpar->codec_type;
        bool transcode = type == AVMEDIA_TYPE_VIDEO || type == AVMEDIA_TYPE_AUDIO;
        // only audio/video need a decoder; other streams just get a context holding their parameters
        const AVCodec *dec = NULL;
        if (transcode && !(dec = avcodec_find_decoder(stream->codecpar->codec_id))) {
            av_log(NULL, AV_LOG_ERROR, "Failed to find decoder for stream #%u\n", i);
            return AVERROR_DECODER_NOT_FOUND;
        }
        AVCodecContext *codec_ctx = avcodec_alloc_context3(dec);
        if (!codec_ctx) {
            av_log(NULL, AV_LOG_ERROR, "Failed to allocate the decoder context for stream #%u\n", i);
            return AVERROR(ENOMEM);
        }
        stream_ctx[i].dec_ctx = codec_ctx; // stored right away so main() frees it on any error
        ret = avcodec_parameters_to_context(codec_ctx, stream->codecpar);
        if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Failed to copy decoder parameters to input decoder context "
                   "for stream #%u\n", i);
            return ret;
        }
        /* Reencode video & audio and remux subtitles etc. */
        if (!transcode)
            continue;
        if (type == AVMEDIA_TYPE_VIDEO)
            codec_ctx->framerate = av_guess_frame_rate(ifmt_ctx, stream, NULL);
        // packet timestamps are in the stream time base; telling the decoder also removes
        // the warning "Could not update timestamps for skipped samples"
        codec_ctx->pkt_timebase = stream->time_base;
        /* Open decoder */
        if ((ret = avcodec_open2(codec_ctx, dec, NULL)) < 0) {
            av_log(NULL, AV_LOG_ERROR, "Failed to open decoder for stream #%u\n", i);
            return ret;
        }
    }
    av_dump_format(ifmt_ctx, 0, filename, 0);
    return 0;
}

static int open_output_file(const char *filename)
{
    int ret;
    ofmt_ctx = NULL;
    avformat_alloc_output_context2(&ofmt_ctx, NULL, NULL, filename);
    if (!ofmt_ctx) {
        av_log(NULL, AV_LOG_ERROR, "Could not create output context\n");
        return AVERROR_UNKNOWN;
    }
    for (unsigned int i = 0; i < ifmt_ctx->nb_streams; i++) {
        AVStream *in_stream = ifmt_ctx->streams[i];
        AVStream *out_stream = avformat_new_stream(ofmt_ctx, NULL);
        if (!out_stream) {
            av_log(NULL, AV_LOG_ERROR, "Failed allocating output stream\n");
            return AVERROR_UNKNOWN;
        }
        AVMediaType type = in_stream->codecpar->codec_type;
        AVCodecContext *dec_ctx = stream_ctx[i].dec_ctx;
        if (type == AVMEDIA_TYPE_VIDEO || type == AVMEDIA_TYPE_AUDIO) {
            /* H.265 for video and MP3 for audio, instead of avcodec_find_encoder(dec_ctx->codec_id) */
            AVCodecID enc_codec_id = type == AVMEDIA_TYPE_VIDEO ? AV_CODEC_ID_HEVC : AV_CODEC_ID_MP3;
            const AVCodec *encoder = avcodec_find_encoder(enc_codec_id);
            if (!encoder) {
                av_log(NULL, AV_LOG_FATAL, "Necessary encoder not found\n");
                return AVERROR_INVALIDDATA;
            }
            AVCodecContext *enc_ctx = avcodec_alloc_context3(encoder);
            if (!enc_ctx) {
                av_log(NULL, AV_LOG_FATAL, "Failed to allocate the encoder context\n");
                return AVERROR(ENOMEM);
            }
            stream_ctx[i].enc_ctx = enc_ctx;
            /* encoder parameters */
            if (type == AVMEDIA_TYPE_VIDEO) {
                /* Same as the input (first example):
                 * enc_ctx->height = dec_ctx->height; enc_ctx->width = dec_ctx->width;
                 * enc_ctx->pix_fmt = encoder->pix_fmts ? encoder->pix_fmts[0] : dec_ctx->pix_fmt; */
                /* Different from the input: half resolution, YUV420P */
                enc_ctx->height = dec_ctx->height / 2;
                enc_ctx->width = dec_ctx->width / 2;
                //enc_ctx->sample_aspect_ratio = dec_ctx->sample_aspect_ratio;
                enc_ctx->pix_fmt = AV_PIX_FMT_YUV420P;
                enc_ctx->time_base = av_inv_q(dec_ctx->framerate); // one tick per frame
                // changing the frame rate changes the output duration (or breaks A/V sync),
                // so it is left unchanged:
                //enc_ctx->framerate = {15,1};
                //enc_ctx->time_base = {1,15};
                stream_ctx[i].sws_ctx = sws_getContext(dec_ctx->width, dec_ctx->height, dec_ctx->pix_fmt,
                                                       enc_ctx->width, enc_ctx->height, enc_ctx->pix_fmt,
                                                       SWS_BILINEAR, NULL, NULL, NULL);
                if (!stream_ctx[i].sws_ctx) {
                    av_log(NULL, AV_LOG_ERROR, "Cannot create the scaling context\n");
                    return AVERROR(EINVAL);
                }
                AVFrame *fv = stream_ctx[i].frame_v = av_frame_alloc();
                if (!fv)
                    return AVERROR(ENOMEM);
                fv->format = enc_ctx->pix_fmt;
                fv->height = enc_ctx->height;
                fv->width = enc_ctx->width;
                if ((ret = av_frame_get_buffer(fv, 0)) < 0)
                    return ret;
            } else {
                /* Same as the input (first example): sample_rate, channel_layout and
                 * sample_fmt copied from dec_ctx */
                /* Different from the input: stereo, planar 16-bit */
                enc_ctx->sample_rate = dec_ctx->sample_rate; // unchanged; e.g. 44100 here would resample
                enc_ctx->channel_layout = AV_CH_LAYOUT_STEREO;
                enc_ctx->channels = av_get_channel_layout_nb_channels(enc_ctx->channel_layout);
                enc_ctx->sample_fmt = AV_SAMPLE_FMT_S16P;
                enc_ctx->time_base = AVRational{1, enc_ctx->sample_rate};
                // the decoder may leave channel_layout unset; derive it from the channel count
                int64_t in_layout = dec_ctx->channel_layout ? (int64_t)dec_ctx->channel_layout
                                                            : av_get_default_channel_layout(dec_ctx->channels);
                stream_ctx[i].swr_ctx = swr_alloc_set_opts(NULL,
                        enc_ctx->channel_layout, enc_ctx->sample_fmt, enc_ctx->sample_rate,
                        in_layout, dec_ctx->sample_fmt, dec_ctx->sample_rate, 0, NULL);
                if (!stream_ctx[i].swr_ctx)
                    return AVERROR(ENOMEM);
                if ((ret = swr_init(stream_ctx[i].swr_ctx)) < 0) {
                    av_log(NULL, AV_LOG_ERROR, "Cannot initialize the resampler\n");
                    return ret;
                }
                AVFrame *fa = stream_ctx[i].frame_a = av_frame_alloc();
                if (!fa)
                    return AVERROR(ENOMEM);
                fa->sample_rate = enc_ctx->sample_rate;
                fa->format = enc_ctx->sample_fmt;
                fa->channel_layout = enc_ctx->channel_layout;
                // initial capacity: one decoded frame at the output rate; the buffer is grown on
                // demand in trans_encode_write_frame() (this allocation fails if frame_size is 0)
                fa->nb_samples = av_rescale_rnd(dec_ctx->frame_size, enc_ctx->sample_rate,
                                                dec_ctx->sample_rate, AV_ROUND_UP);
                av_frame_get_buffer(fa, 0);
            }
            /* must be set before avcodec_open2() so the encoder produces global extradata */
            if (ofmt_ctx->oformat->flags & AVFMT_GLOBALHEADER)
                enc_ctx->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
            /* Third parameter can be used to pass settings to encoder */
            if ((ret = avcodec_open2(enc_ctx, encoder, NULL)) < 0) {
                av_log(NULL, AV_LOG_ERROR, "Cannot open encoder for stream #%u\n", i);
                return ret;
            }
            if ((ret = avcodec_parameters_from_context(out_stream->codecpar, enc_ctx)) < 0) {
                av_log(NULL, AV_LOG_ERROR, "Failed to copy encoder parameters to output stream #%u\n", i);
                return ret;
            }
            out_stream->time_base = enc_ctx->time_base;
            out_stream->codecpar->codec_tag = 0; // let the muxer choose the codec tag
        } else if (type == AVMEDIA_TYPE_UNKNOWN) {
            av_log(NULL, AV_LOG_FATAL, "Elementary stream #%u is of unknown type, cannot proceed\n", i);
            return AVERROR_INVALIDDATA;
        } else {
            /* if this stream must be remuxed */
            if ((ret = avcodec_parameters_copy(out_stream->codecpar, in_stream->codecpar)) < 0) {
                av_log(NULL, AV_LOG_ERROR, "Copying parameters for stream #%u failed\n", i);
                return ret;
            }
            out_stream->time_base = in_stream->time_base;
        }
    }
    av_dump_format(ofmt_ctx, 0, filename, 1);
    if (!(ofmt_ctx->oformat->flags & AVFMT_NOFILE)) {
        if ((ret = avio_open(&ofmt_ctx->pb, filename, AVIO_FLAG_WRITE)) < 0) {
            av_log(NULL, AV_LOG_ERROR, "Could not open output file '%s'\n", filename);
            return ret;
        }
    }
    /* init muxer, write output file header */
    if ((ret = avformat_write_header(ofmt_ctx, NULL)) < 0) {
        av_log(NULL, AV_LOG_ERROR, "Error occurred when opening output file\n");
        return ret;
    }
    return 0;
}

static int encode_write_frame(AVFrame *frame, unsigned int stream_index)
{
    AVCodecContext *enc_ctx = stream_ctx[stream_index].enc_ctx;
    AVPacket *enc_pkt = av_packet_alloc();
    if (!enc_pkt)
        return AVERROR(ENOMEM);
    int ret = avcodec_send_frame(enc_ctx, frame); // frame == NULL starts flushing the encoder
    while (ret >= 0) {
        ret = avcodec_receive_packet(enc_ctx, enc_pkt);
        if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF) {
            ret = 0; // the encoder needs more input, or it is fully flushed
            break;
        } else if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Error during encoding\n");
            break;
        }
        // prepare packet for muxing: encoder time base -> output stream time base
        enc_pkt->stream_index = stream_index;
        av_packet_rescale_ts(enc_pkt, enc_ctx->time_base,
                             ofmt_ctx->streams[stream_index]->time_base);
        printf(" pts %lld, dts %lld, duration %lld \n",
               (long long)enc_pkt->pts, (long long)enc_pkt->dts, (long long)enc_pkt->duration);
        // mux encoded frame
        ret = av_interleaved_write_frame(ofmt_ctx, enc_pkt);
        av_packet_unref(enc_pkt);
    }
    av_packet_free(&enc_pkt);
    return ret;
}

static int trans_encode_write_frame(AVFrame *frame, unsigned int stream_index)
{
    StreamContext *sc = &stream_ctx[stream_index];
    int ret = 0;
    if (sc->enc_ctx->codec_type == AVMEDIA_TYPE_VIDEO) {
        AVFrame *frame_tmp = sc->frame_v;
        // the encoder may still reference the previous buffer; get a writable one
        if ((ret = av_frame_make_writable(frame_tmp)) < 0)
            return ret;
        ret = sws_scale(sc->sws_ctx, frame->data, frame->linesize, 0, frame->height,
                        frame_tmp->data, frame_tmp->linesize);
        if (ret < 0)
            return ret;
        // sws_scale() only fills the pixel buffers; the pts is carried over by hand
        frame_tmp->pts = frame->pts;
        ret = encode_write_frame(frame_tmp, stream_index);
    } else if (sc->enc_ctx->codec_type == AVMEDIA_TYPE_AUDIO) {
        AVFrame *frame_tmp = sc->frame_a;
        // upper bound on output samples: resampler delay plus this frame, at the output rate
        int dst_nb_samples = av_rescale_rnd(
            swr_get_delay(sc->swr_ctx, frame->sample_rate) + frame->nb_samples,
            frame_tmp->sample_rate, frame->sample_rate, AV_ROUND_UP);
        if (dst_nb_samples > frame_tmp->nb_samples) {
            // grow the buffer; it is never shrunk, to avoid repeated reallocation
            int format = frame_tmp->format;
            uint64_t chn_layout = frame_tmp->channel_layout;
            int sample_rate = frame_tmp->sample_rate;
            av_frame_unref(frame_tmp);
            frame_tmp->format = format;
            frame_tmp->channel_layout = chn_layout;
            frame_tmp->nb_samples = dst_nb_samples; // new capacity
            frame_tmp->sample_rate = sample_rate;
            if ((ret = av_frame_get_buffer(frame_tmp, 0)) < 0)
                return ret;
            printf("frame resize %d\n", frame_tmp->nb_samples);
        } else if ((ret = av_frame_make_writable(frame_tmp)) < 0) {
            return ret;
        }
        //ret = swr_convert_frame(sc->swr_ctx, frame_tmp, frame);
        ret = swr_convert(sc->swr_ctx, frame_tmp->extended_data, frame_tmp->nb_samples,
                          (const uint8_t **)frame->extended_data, frame->nb_samples);
        if (ret <= 0)
            return ret; // error, or all input is still buffered inside the resampler
        // swr_convert() does not set the pts either: convert it from the input stream
        // time base (decoder output) to the encoder time base 1/sample_rate
        frame_tmp->pts = frame->pts == AV_NOPTS_VALUE ? AV_NOPTS_VALUE
            : av_rescale_q(frame->pts, ifmt_ctx->streams[stream_index]->time_base,
                           sc->enc_ctx->time_base);
        int tmp_nb_samples = frame_tmp->nb_samples;
        frame_tmp->nb_samples = ret; // important: number of samples actually converted
        ret = encode_write_frame(frame_tmp, stream_index);
        frame_tmp->nb_samples = tmp_nb_samples; // restore, to avoid reallocating every time
    }
    return ret;
}

int main()
{
    const char *input_file = "../files/Titanic.mp4";
    const char *output_file = "Titanic.mp4";
    // declared before the first goto: in C++ a goto may not jump over initializations
    AVPacket *pkt = NULL;
    AVFrame *frame = NULL;
    int64_t frame_idx = 0; // video frame counter, used as pts in the 1/framerate time base
    int ret;

    if ((ret = open_input_file(input_file)) < 0)
        goto end;
    if ((ret = open_output_file(output_file)) < 0)
        goto end;
    pkt = av_packet_alloc();
    frame = av_frame_alloc();
    if (!pkt || !frame) {
        ret = AVERROR(ENOMEM);
        goto end;
    }

    while (1) {
        if ((ret = av_read_frame(ifmt_ctx, pkt)) < 0)
            break; // end of file (or read error)
        unsigned int stream_index = pkt->stream_index;
        AVMediaType media_type = ifmt_ctx->streams[stream_index]->codecpar->codec_type;
        if (!stream_ctx[stream_index].enc_ctx) {
            // not transcoded: remux the packet unchanged
            av_packet_rescale_ts(pkt, ifmt_ctx->streams[stream_index]->time_base,
                                 ofmt_ctx->streams[stream_index]->time_base);
            ret = av_interleaved_write_frame(ofmt_ctx, pkt);
            av_packet_unref(pkt);
            if (ret < 0)
                goto end;
            continue;
        }
        // decode; the packet keeps the stream time base (the decoder's pkt_timebase),
        // so decoded frame pts are in ifmt_ctx->streams[stream_index]->time_base
        ret = avcodec_send_packet(stream_ctx[stream_index].dec_ctx, pkt);
        av_packet_unref(pkt);
        if (ret < 0) {
            av_log(NULL, AV_LOG_ERROR, "Error while sending a packet to the decoder\n");
            goto end;
        }
        while (ret >= 0) {
            ret = avcodec_receive_frame(stream_ctx[stream_index].dec_ctx, frame);
            if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)
                break;
            else if (ret < 0) {
                av_log(NULL, AV_LOG_ERROR, "Error while receiving a frame from the decoder\n");
                goto end;
            }
            if (media_type == AVMEDIA_TYPE_VIDEO)
                frame->pts = ++frame_idx; // assumes a constant frame rate: one tick per frame
            // convert, encode and write
            ret = trans_encode_write_frame(frame, stream_index);
            av_frame_unref(frame);
            if (ret < 0)
                goto end;
        }
    }

    // flush: drain each decoder through the converters, then each encoder
    for (unsigned int i = 0; i < ifmt_ctx->nb_streams; i++) {
        if (!stream_ctx[i].enc_ctx)
            continue; // remuxed stream, nothing to flush
        // use this stream's type, not the type of the last packet read
        AVMediaType media_type = ifmt_ctx->streams[i]->codecpar->codec_type;
        // flush decoder
        ret = avcodec_send_packet(stream_ctx[i].dec_ctx, NULL);
        while (ret >= 0) {
            ret = avcodec_receive_frame(stream_ctx[i].dec_ctx, frame);
            if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)
                break;
            else if (ret < 0) {
                av_log(NULL, AV_LOG_ERROR, "Error while receiving a frame from the decoder\n");
                goto end;
            }
            if (media_type == AVMEDIA_TYPE_VIDEO)
                frame->pts = ++frame_idx;
            // convert, encode and write
            ret = trans_encode_write_frame(frame, i);
            av_frame_unref(frame);
            if (ret < 0)
                goto end;
        }
        // flush encoder
        if ((ret = encode_write_frame(NULL, i)) < 0)
            goto end;
    }
    ret = av_write_trailer(ofmt_ctx);

end:
    av_packet_free(&pkt);
    av_frame_free(&frame);
    for (unsigned int i = 0; ifmt_ctx && stream_ctx && i < ifmt_ctx->nb_streams; i++) {
        avcodec_free_context(&stream_ctx[i].dec_ctx);
        avcodec_free_context(&stream_ctx[i].enc_ctx);
        sws_freeContext(stream_ctx[i].sws_ctx); // does nothing for NULL
        av_frame_free(&stream_ctx[i].frame_v);
        swr_free(&stream_ctx[i].swr_ctx);
        av_frame_free(&stream_ctx[i].frame_a);
    }
    av_freep(&stream_ctx);
    avformat_close_input(&ifmt_ctx);
    if (ofmt_ctx && !(ofmt_ctx->oformat->flags & AVFMT_NOFILE))
        avio_closep(&ofmt_ctx->pb);
    avformat_free_context(ofmt_ctx);
    return ret < 0 ? 1 : 0;
}
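The audio branch of trans_encode_write_frame() sizes its output buffer from the resampler delay and grows it only when needed (notes 2 and 3). That bookkeeping can be isolated as below. `out_capacity()` mirrors the `av_rescale_rnd(..., AV_ROUND_UP)` expression with plain integer ceiling division, assuming `delay` is counted in input-rate samples as `swr_get_delay(swr_ctx, in_rate)` reports it; `ReusableBuffer` is a hypothetical stand-in for `frame_a` that tracks sizes only.

```cpp
#include <cassert>
#include <cstdint>

// ceil((delay + in_samples) * out_rate / in_rate): the most samples one
// conversion call can produce when `delay` input samples are already buffered.
int64_t out_capacity(int64_t delay, int64_t in_samples, int64_t in_rate, int64_t out_rate) {
    const int64_t num = (delay + in_samples) * out_rate;
    return (num + in_rate - 1) / in_rate;  // integer ceiling division
}

// Tracks the allocated capacity of a reused frame: grow when needed, never shrink.
struct ReusableBuffer {
    int64_t allocated = 0;  // plays the role of frame_a's allocated nb_samples
    int reallocs = 0;       // how many times the buffer had to be reallocated
    void ensure(int64_t needed) {
        if (needed > allocated) {
            allocated = needed;
            ++reallocs;
        }
    }
};
```

For 1024 input samples, the bound is 1115 when resampling 44.1 kHz to 48 kHz and stays 1024 at an unchanged rate; a request that fits the current capacity (e.g. 1030 after growing to 1040) reuses the buffer instead of reallocating it.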

Original article: CSDN

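Copyright notice

This article represents only the author's views and not those of 码农殇.

Published on 码农殇 with the author's authorization; do not reproduce without permission.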